I was recently invited to join a panel discussion among developers to dispel the myth of the typical BS Buzzword Bingo around machine learning and AI. In this blog post, I will share some of the buzzwords we talked about, with a short description and links. Oops, I already used some buzzwords. So let’s start.

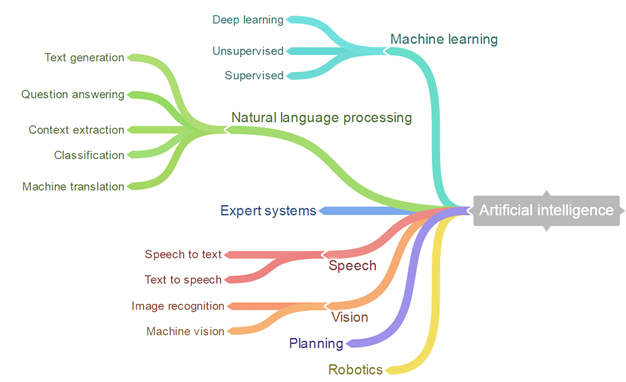

AI (Artificial Intelligence) is the magic potion that fixes all problems of all companies and will make us all unemployed in the future. Well, this is how it is often sold. For me, AI is computer science mixed with statistics that enables a machine to do things better, faster and more efficiently. When they hear AI, most people think of fields like machine learning, robotics and computer vision.

I found this nice visualization of different areas of AI, with some more buzzwords. Some people might argue that the tree could look different, but as a first overview it is quite nice.

source: https://hackernoon.com/hn-images/1*SQC439dWhYeJ31ph9Oesqg.png

Machine learning

ML (Machine Learning) is often divided into two types of models. Supervised models try to predict an outcome that you can observe, for example whether a customer will like a certain product or whether a picture shows a dog or a cat. Unsupervised models try to find patterns in data without such an observed outcome, e.g. to group customers into segments.

Deep learning can involve both supervised and unsupervised components. The basic idea of deep learning is to imitate the functionality of our brain: you create an artificial neural network with multiple layers (hence “deep”), loosely modeled on the neurons in a brain. A stimulus, i.e. the input data, is sent through the network layer by layer and interpreted at the end. You cannot really understand how and why the network reaches its answer, but it works. For me, deep learning is just a generic term for this type of model, which is often supervised and increasingly unsupervised.
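To make the supervised/unsupervised split concrete, here is a minimal pure-Python sketch on made-up 2-D points. A nearest-centroid classifier learns from labelled examples (supervised), while k-means groups the very same points without looking at any labels (unsupervised). The data, the "cat"/"dog" labels and all function names are hypothetical; in practice you would reach for a library such as scikit-learn.

```python
import math

# Made-up 2-D feature vectors, purely for illustration.
points = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.2), (5.3, 4.9), (4.8, 5.1)]

# Supervised: every training point comes with an observed label.
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]


def mean(ps):
    """Component-wise mean of a non-empty list of 2-D points."""
    return (sum(p[0] for p in ps) / len(ps), sum(p[1] for p in ps) / len(ps))


def fit_centroids(xs, ys):
    """Nearest-centroid classifier: remember the mean point of each class."""
    groups = {}
    for p, y in zip(xs, ys):
        groups.setdefault(y, []).append(p)
    return {y: mean(ps) for y, ps in groups.items()}


def predict(centroids, p):
    """Predict the label whose class centroid is closest to p."""
    return min(centroids, key=lambda y: math.dist(p, centroids[y]))


# Unsupervised: no labels at all, we only look for structure in the points.
def kmeans(xs, k, iters=10):
    """Plain k-means with a deterministic farthest-first initialization."""
    centers = [xs[0]]
    while len(centers) < k:
        centers.append(max(xs, key=lambda p: min(math.dist(p, c) for c in centers)))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in xs:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return clusters


centroids = fit_centroids(points, labels)
print(predict(centroids, (4.9, 5.0)))  # dog
print(kmeans(points, k=2))
# [[(1.0, 1.2), (0.8, 1.0), (1.1, 0.9)], [(5.0, 5.2), (5.3, 4.9), (4.8, 5.1)]]
```

Note that k-means finds the same two groups, but it cannot tell you which one is "cat" and which is "dog": naming the segments is up to you.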

Since there is so much buzz about deep learning, we also discussed Generative Adversarial Networks (GANs), because they produce quite impressive and entertaining results. A GAN combines two neural networks that compete with each other: a generator that produces new samples (e.g. images) and a discriminator that tries to tell them apart from real ones. This interplay leads to somewhat creative results. Ever seen those tools where you can throw in a photograph of yourself and get back an image that looks as if van Gogh had painted you? If not, you should try one, e.g. deepart.io (strictly speaking, such tools usually rely on a related deep learning technique called neural style transfer rather than on GANs). Deep generative models are also behind deepfakes, like Obama’s public service announcement on YouTube or deepfake remakes of Princess Leia’s appearance in Rogue One. The model learns what a source face looks like from different angles and transposes it onto a different target.
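The "adversarial" part can be written down compactly. In the standard GAN formulation introduced by Goodfellow et al. in 2014, a generator $G$ turns random noise $z$ into fake samples, and a discriminator $D$ outputs the probability that a sample is real. The two play a minimax game over the value function $V(D, G)$:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ tries to push $V$ up by assigning high probability to real data $x$ and low probability to generated samples $G(z)$; $G$ tries to push $V$ down by producing samples that $D$ mistakes for real ones.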

We also talked about how to build deep learning models. In the buzzword jungle, you have probably come across two of the best-known frameworks, “TensorFlow” and “Keras”.

- TensorFlow is an open source deep learning framework developed by Google. It’s widely used, well documented, and lets you build all kinds of deep learning models in a very flexible way. TensorFlow also offers tooling that facilitates the work, e.g. TensorBoard, which visualizes neural networks and their training progress.

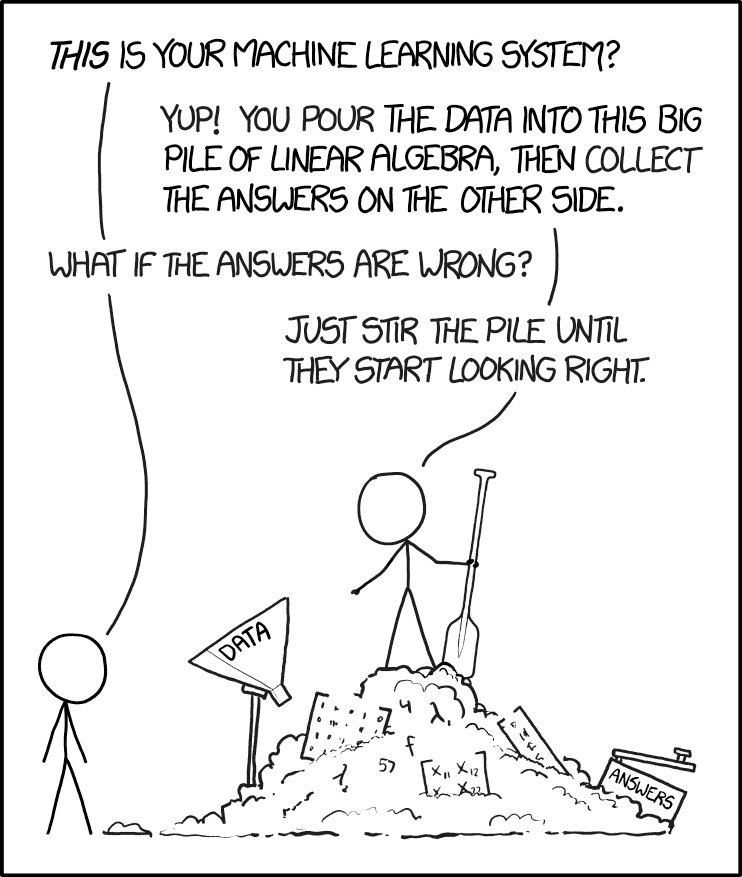

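- Keras is another well-known deep learning framework. It is a high-level API that runs on top of backends such as TensorFlow (which ships it as `tf.keras`), and it is one of those frameworks that use lots of abstraction to make deep learning easy, even if you have no idea what you are doing.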
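To show what "easy to use" looks like in practice, here is a minimal sketch of a tiny binary classifier built with the Keras API bundled in TensorFlow (`tf.keras`). It assumes TensorFlow 2.x is installed; the random toy data, the layer sizes and the temporary log directory are arbitrary choices for illustration. The TensorBoard callback writes training logs that TensorBoard can then visualize.

```python
import tempfile

import numpy as np
import tensorflow as tf

# Made-up toy data: 100 samples with 4 features and a binary label.
rng = np.random.default_rng(0)
x = rng.normal(size=(100, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

# Keras: describe the network as a stack of layers; TensorFlow runs it underneath.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),     # hidden layer
    tf.keras.layers.Dense(1, activation="sigmoid"),  # probability of class 1
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# The TensorBoard callback writes logs you can open with `tensorboard --logdir <log_dir>`.
log_dir = tempfile.mkdtemp()
history = model.fit(
    x, y, epochs=3, batch_size=16, verbose=0,
    callbacks=[tf.keras.callbacks.TensorBoard(log_dir=log_dir)],
)

print(model.count_params())  # (4*8 + 8) + (8*1 + 1) = 49
```

The same model could be written in lower-level TensorFlow, but Keras hides the training loop, gradient computation and logging behind `compile` and `fit`.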

Natural Language Processing

NLP sounds like Neuro-Linguistic Programming, but in our context it stands for Natural Language Processing. The objective of NLP is to read, decipher, understand and make sense of human language in a way that is valuable. This could, for example, include extracting entities such as people or companies from a text and classifying them.
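Real entity extraction relies on trained models, but the task itself is easy to picture. Below is a deliberately naive sketch that assumes a hypothetical hand-made dictionary (a "gazetteer") of known names and their entity types; a trained NLP model would instead learn to recognize and classify entities that are not in any such list.

```python
import re

# Hypothetical, hand-made gazetteer mapping known names to entity types.
GAZETTEER = {
    "Google": "ORG",
    "Facebook": "ORG",
    "OpenAI": "ORG",
    "Obama": "PERSON",
}


def extract_entities(text):
    """Return (entity, type) pairs in the order they appear in the text."""
    hits = []
    for name, label in GAZETTEER.items():
        # \b ensures whole-word matches only ("Google" but not "Googleplex").
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            hits.append((match.start(), name, label))
    # Sort by position in the text, then drop the position.
    return [(name, label) for _, name, label in sorted(hits)]


print(extract_entities("Google, Facebook and OpenAI released pretrained language models."))
# [('Google', 'ORG'), ('Facebook', 'ORG'), ('OpenAI', 'ORG')]
```

A lookup like this fails on every name it does not already contain, which is exactly why NLP uses models trained on large annotated corpora.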

Especially for NLP tasks, it is common to use pretrained models and fine-tune them for specific tasks (transfer learning). Usually, a big corporation such as Google or Facebook trains a model (typically a neural net) on a huge corpus of data with massive computing resources and open sources the model weights. Smaller companies would not have the data or the capacity to train such models from scratch and can instead fine-tune or extend the existing ones. Most of these models build on deep learning and extend its basic ideas with some clever twists. Popular examples are the Sesame Street models BERT and ELMo and their variants (RoBERTa, DistilBERT…).
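The freeze-and-retrain pattern behind transfer learning can be sketched in Keras as well. This is a minimal sketch under clearly stated assumptions: the "pretrained" base below is only a randomly initialized stand-in (a real setup would load published weights, e.g. a BERT checkpoint, typically through a library such as Hugging Face Transformers), the input size and the 3-class task are hypothetical, and the data is random noise. Only the new task-specific head is trained.

```python
import numpy as np
import tensorflow as tf

# Hypothetical stand-in for a pretrained model. A real setup would load published
# weights; these layers are randomly initialized, purely for illustration.
base = tf.keras.Sequential(
    [
        tf.keras.Input(shape=(16,)),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(32, activation="relu"),
    ],
    name="pretrained_base",
)

# Freeze the base: its weights stay fixed while the new head is trained.
base.trainable = False

# New task-specific head, e.g. for a hypothetical 3-class text classification task.
inputs = tf.keras.Input(shape=(16,))
features = base(inputs, training=False)
outputs = tf.keras.layers.Dense(3, activation="softmax")(features)
model = tf.keras.Model(inputs, outputs)
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

# Random toy data standing in for the small task-specific dataset.
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 16)).astype("float32")
y = rng.integers(0, 3, size=64)

# Snapshot the base weights so we can check they are unchanged after training.
frozen_before = [w.numpy().copy() for w in base.weights]
model.fit(x, y, epochs=2, batch_size=16, verbose=0)

# Only the head's kernel (32x3) and bias (3) were trainable: 99 parameters.
print(sum(w.numpy().size for w in model.trainable_weights))
```

Freezing the base and training only a head is the simplest variant; full fine-tuning would afterwards unfreeze some or all base layers and continue training with a low learning rate.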

One model that created a lot of buzz is GPT-3. In May 2020, OpenAI presented this pretrained model with 175 billion (!) parameters and soon gave selected beta testers access to it through an API. It was promoted as the AI of the future that could perform many different language tasks: not only understand and write human-language text, but also learn programming languages and make developers obsolete. A quite impressive video posted on Twitter showed the model writing simple React code to create buttons on a website. Access to the model via the API will probably be sold to companies for a lot of money in the future.

We touched on a lot of buzzwords already, which should help you survive a flood of AI buzzwords. One last piece of advice: in those situations, never talk about just “data” without using the adjective “big”.